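tl;dr: Netem, which Mininet uses to delay packets, interacts with TCP Small Queues (TSQ), so Multipath TCP schedulers can behave differently in Mininet than in real networks.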

Introduction

Multipath TCP is a recent TCP extension that enables the use of multiple paths for a single logical connection. During our work on flexible Multipath TCP scheduling, we used Mininet and the Linux kernel Multipath TCP implementation for development and systematic evaluation. We chose Mininet because it makes it convenient to run a real network stack in user-defined topologies, and it is widely used in current efforts to reproduce network research results. We were therefore surprised when our Mininet experiments and our real-world experiments showed significantly different behavior.

A Simple Example

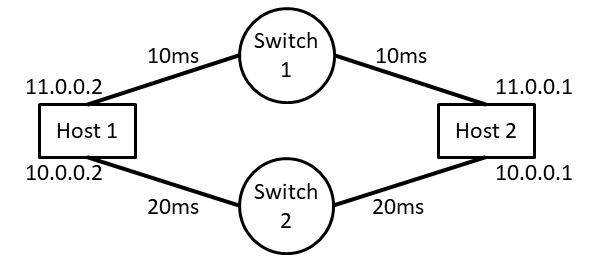

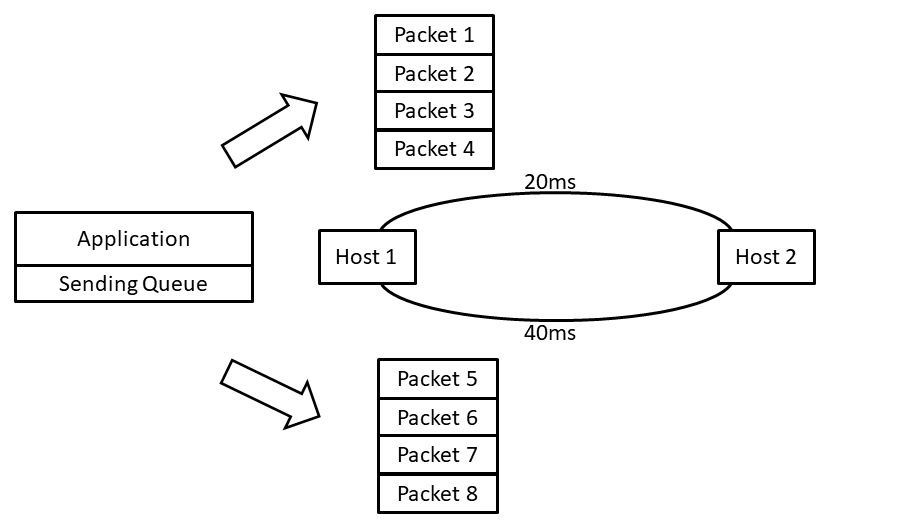

For a basic MPTCP measurement example, let's consider the following topology with two paths (Figure 1). The first path has a round-trip time of 40ms, the second path has a round-trip time of 80ms.

The topology in Figure 1 can be easily specified with the Mininet Python API as follows:

```python
from mininet.topo import Topo


class StaticTopo(Topo):
    # bw (Mbit/s) and delay only take effect with TCLink,
    # e.g. Mininet(topo=StaticTopo(), link=TCLink)
    def build(self):
        h1 = self.addHost('h1')
        h2 = self.addHost('h2')

        # first path: 10 ms per link
        s1 = self.addSwitch('s1')
        self.addLink(h1, s1, bw=100, delay="10ms")
        self.addLink(h2, s1, bw=100, delay="10ms")

        # second path: 20 ms per link
        s2 = self.addSwitch('s2')
        self.addLink(h1, s2, bw=100, delay="20ms")
        self.addLink(h2, s2, bw=100, delay="20ms")
```
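Each path crosses two links, and TCLink applies the configured delay on both ends of a link, so every link delays a packet once per direction. A path's round-trip time is therefore twice the sum of its link delays. The helper below is a hypothetical function, not part of Mininet. It checks the 40 ms and 80 ms figures for this topology.

```python
import re


def delay_ms(delay):
    """Parse a Mininet-style delay string such as "10ms" (None means no delay)."""
    if delay is None:
        return 0.0
    match = re.fullmatch(r"\s*([0-9.]+)\s*(us|ms|s)\s*", delay)
    if match is None:
        raise ValueError(f"unsupported delay format: {delay!r}")
    value, unit = float(match.group(1)), match.group(2)
    return value * {"us": 0.001, "ms": 1.0, "s": 1000.0}[unit]


def path_rtt_ms(link_delays):
    """RTT of a path: each link delays a packet once in each direction."""
    return 2 * sum(delay_ms(d) for d in link_delays)


# h1-s1 and s1-h2 form the first path; h1-s2 and s2-h2 form the second.
print(path_rtt_ms(["10ms", "10ms"]))  # 40.0
print(path_rtt_ms(["20ms", "20ms"]))  # 80.0
```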

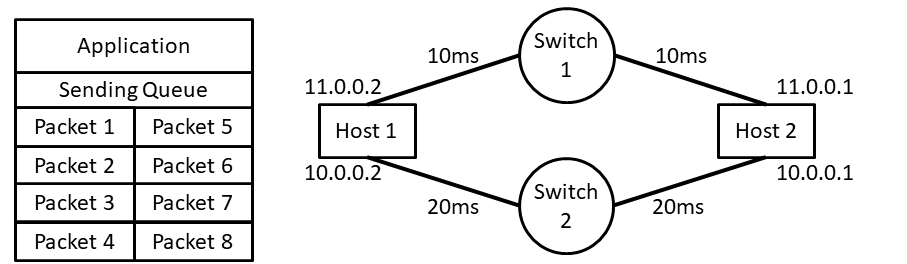

Now, let's run an application on top of this topology. Our example application starts with a short handshake and then sends 10 KB of data. At this point, Multipath TCP has established one subflow per path, so two subflows are available. In a typical Internet setup, 10 KB of data require roughly 8 packets. Thus, MPTCP has to schedule 8 packets on two subflows, each of which has an initial congestion window of 10 packets.
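The packet count follows from dividing the payload by the maximum segment size (MSS). The sketch below makes two assumptions: 10 KB means 10 × 1024 bytes, and the MSS is 1448 bytes (a 1500-byte Ethernet MTU minus 20-byte IP and TCP headers and a 12-byte TCP timestamp option). MPTCP's own options shave off a few more bytes, which still yields 8 packets.

```python
import math


def packets_needed(payload_bytes, mss_bytes):
    """Number of TCP segments needed to carry the payload."""
    return math.ceil(payload_bytes / mss_bytes)


PAYLOAD = 10 * 1024   # assumption: 10 KB = 10240 bytes
MSS = 1448            # assumption: 1500 MTU - 20 (IP) - 20 (TCP) - 12 (timestamps)
INITIAL_CWND = 10     # initial congestion window per subflow, in packets

n = packets_needed(PAYLOAD, MSS)
print(n)                  # 8
print(n <= INITIAL_CWND)  # True: either subflow alone could send everything at once
```

Because 8 packets fit into either subflow's initial window, the congestion window alone does not force the scheduler onto the second path.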

Mininet Evaluation

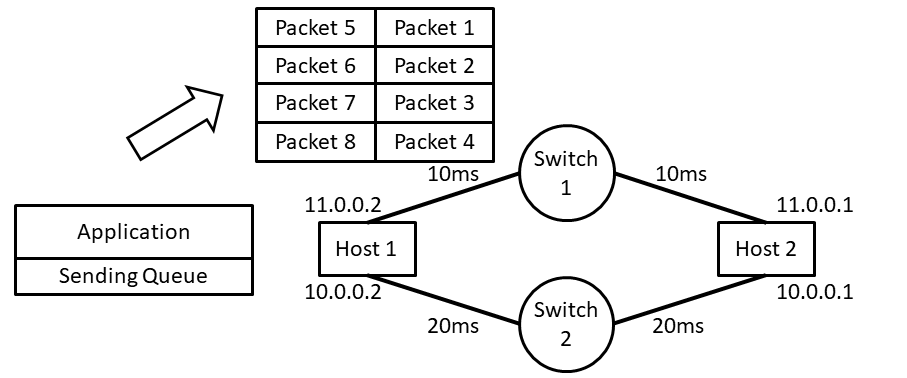

Using Mininet and this (simplified) example application, we found that the default Multipath TCP scheduler schedules all 8 packets on the subflow with the lowest round-trip time (Figures 3 and 4).
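The default scheduler prefers the available subflow with the lowest smoothed round-trip time. The following is a simplified model, not the kernel code. The `Subflow` class and the `schedule` function are hypothetical. A subflow counts as available while it has congestion window space, and the RTT estimates are taken from the topology. TSQ is deliberately left out of this model. Under these assumptions, every packet lands on the 40 ms subflow.

```python
from dataclasses import dataclass


@dataclass
class Subflow:
    name: str
    srtt_ms: float      # smoothed RTT estimate
    cwnd: int           # congestion window, in packets
    in_flight: int = 0  # packets sent but not yet acknowledged

    def has_window_space(self):
        return self.in_flight < self.cwnd


def schedule(subflows, num_packets):
    """Assign each packet to the lowest-RTT subflow that still has window space."""
    assignment = []
    for _ in range(num_packets):
        candidates = [sf for sf in subflows if sf.has_window_space()]
        if not candidates:
            break  # all windows are full; a real sender would wait for ACKs
        best = min(candidates, key=lambda sf: sf.srtt_ms)
        best.in_flight += 1
        assignment.append(best.name)
    return assignment


subflows = [Subflow("path1", srtt_ms=40, cwnd=10), Subflow("path2", srtt_ms=80, cwnd=10)]
result = schedule(subflows, 8)
print(result.count("path1"), result.count("path2"))  # 8 0
```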

Real-World Evaluation

When we used the same application in a comparable real-world setup, we observed different behavior. In the real-world measurements, packets were distributed over both paths, although the round-trip times showed the same differences as in our emulation setup (Figure 5).

We repeated the experiment several times, and the results were reproducible. So why do our Mininet experiments and the real-world measurements show significantly different behavior?

Explanation

Digging into the Multipath TCP implementation, we found that the scheduler stops using the first path because of TCP Small Queues (TSQ). TSQ limits how much data each socket may have queued in the qdisc and device layers below TCP. The motivation is that large queues in the network stack are known to increase latency through queuing delay. During the development of Multipath TCP, this commit was added to avoid sending packets on subflows that TSQ considers throttled. For many application scenarios, this is a reasonable design decision for Multipath TCP.

```c
/* If TSQ is already throttling us, do not send on this subflow. When
 * TSQ gets cleared the subflow becomes eligible again.
 */
if (test_bit(TSQ_THROTTLED, &tp->tsq_flags))
	return 0;
```

But why don't we see this behavior in our Mininet emulation?

This requires a more detailed analysis of the interactions between Mininet, TSQ and Multipath TCP. Mininet relies on netem for delay emulation. Checking the implementation of netem, we found that netem orphans packets on enqueue whenever a delay, jitter or rate is configured. As a result, TSQ no longer counts these packets as queued.

```c
/* If a delay is expected, orphan the skb. (orphaning usually takes
 * place at TX completion time, so _before_ the link transit delay)
 */
if (q->latency || q->jitter || q->rate)
	skb_orphan_partial(skb);
```
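To see why orphaning matters, consider a toy model of TSQ for a single subflow. Each packet handed to the qdisc stays charged to the socket until the driver reports TX completion, and the subflow counts as throttled once the charged bytes reach a limit. The `TsqSocket` class, the 1500-byte charge per packet and the two-packet limit are illustrative assumptions, not the kernel's exact accounting. When the qdisc orphans the packet on enqueue, as netem does for delayed links, the charge disappears immediately and the throttle never engages.

```python
class TsqSocket:
    """Toy model of TCP Small Queues accounting for one subflow (illustrative only)."""

    def __init__(self, limit_bytes):
        self.limit_bytes = limit_bytes
        self.queued_bytes = 0  # bytes still charged to the socket below TCP

    @property
    def throttled(self):
        return self.queued_bytes >= self.limit_bytes

    def enqueue(self, size, qdisc_orphans):
        """Hand a packet to the qdisc; netem-style orphaning drops the charge at once."""
        if not qdisc_orphans:
            self.queued_bytes += size
        # with orphaning, the socket no longer owns the bytes, so nothing stays charged

    def tx_complete(self, size):
        """Driver finished transmitting a packet that was still charged to the socket."""
        self.queued_bytes -= size


def packets_before_throttle(qdisc_orphans, limit_bytes, size, max_packets):
    """Send back-to-back packets (no TX completions yet) until TSQ throttles."""
    sock = TsqSocket(limit_bytes)
    sent = 0
    while sent < max_packets and not sock.throttled:
        sock.enqueue(size, qdisc_orphans)
        sent += 1
    return sent


PACKET = 1500        # assumption: bytes charged per packet
LIMIT = 2 * PACKET   # assumption: roughly two packets' worth, for illustration

print(packets_before_throttle(False, LIMIT, PACKET, 8))  # 2: subflow gets throttled
print(packets_before_throttle(True, LIMIT, PACKET, 8))   # 8: never throttled
```

In this model, a subflow without orphaning becomes throttled after a couple of packets, so a TSQ-aware scheduler has to move on to the next subflow. With orphaning, the fast subflow always stays eligible and keeps winning the lowest-RTT choice.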

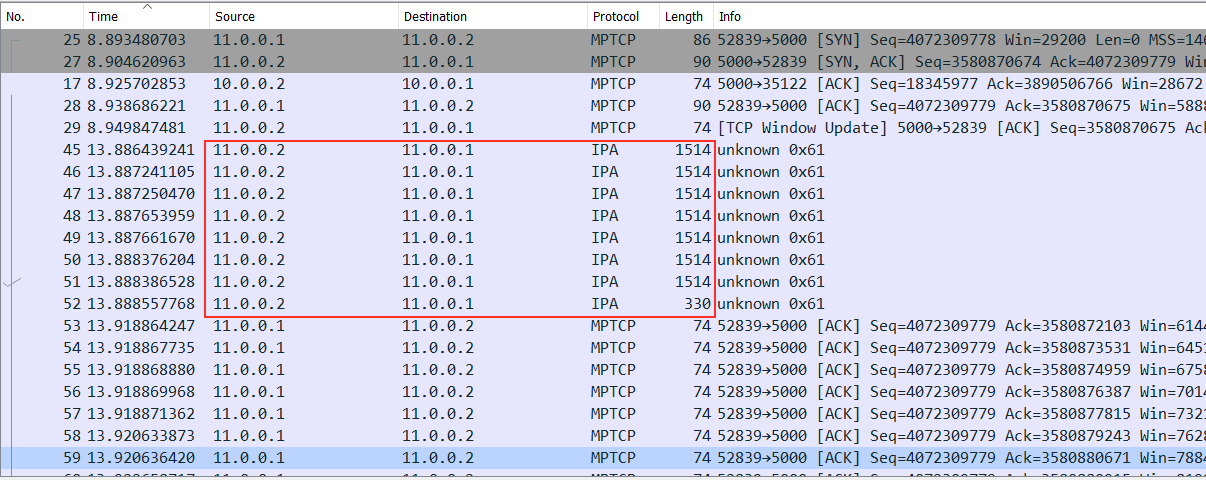

Thus, Multipath TCP never sees a TSQ-throttled subflow in Mininet setups where the sender's links are delayed by netem. To substantiate this claim, we repeated the previous Mininet emulation with a slightly modified topology. In this topology, the sender's links do not use netem, and the delay moves to the receiver-side links. The round-trip times therefore remain 40 ms and 80 ms.

```python
from mininet.topo import Topo


class StaticTopo(Topo):
    def build(self):
        h1 = self.addHost('h1')
        h2 = self.addHost('h2')

        # first path: no netem on the sender's link, 20 ms on the receiver's link
        s1 = self.addSwitch('s1')
        self.addLink(h1, s1, bw=100)
        self.addLink(h2, s1, bw=100, delay="20ms")

        # second path: no netem on the sender's link, 40 ms on the receiver's link
        s2 = self.addSwitch('s2')
        self.addLink(h1, s2, bw=100)
        self.addLink(h2, s2, bw=100, delay="40ms")
```

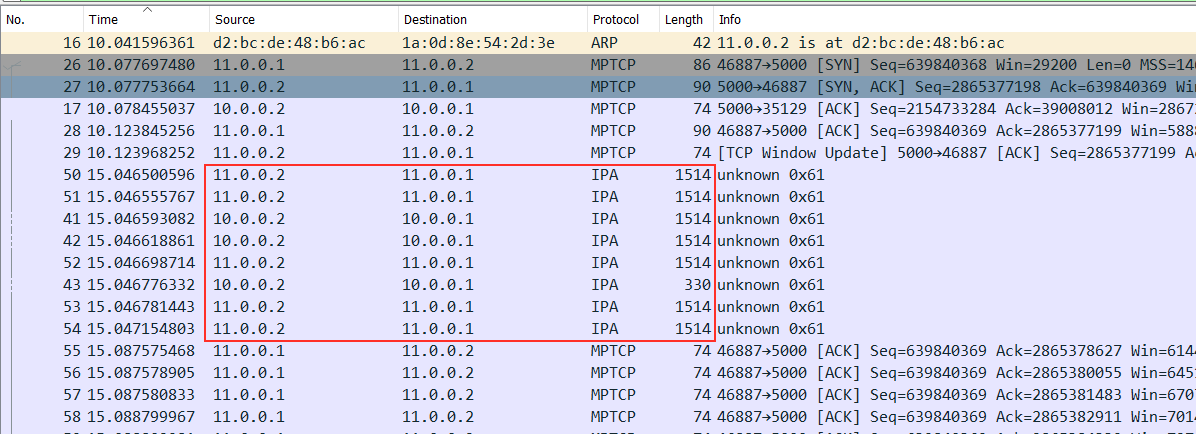

The Wireshark trace in Figure 6 shows the expected behavior, which is comparable to our real-world measurements.

Conclusion

Our experiments show that we have to be careful when transferring emulation results to real-world applications. Evaluation results should always be (at least) supported by real-world measurements. We are still convinced that Mininet is great for network experiments and reproducible network research, but our experience confirms that sanity checks are essential.

Did we misuse Mininet? Since our initial Mininet setup does not reproduce real-world behavior, we probably used it wrongly. However, we just followed established online examples, so others are likely to fall into the same pitfall. Note that this problem is not specific to Mininet; it may appear in any comparable netem setup.

Are there more pitfalls? Yes. We are preparing some more posts. If you have struggled with Mininet pitfalls, feel free to contact us; we might prepare a collection :-)

Speaking of MPTCP: how can I get the behavior MPTCP showed in Mininet in the real world? There are several options. You might change the queue sizes considered by TSQ, or change the MPTCP scheduler, e.g., using ProgMP.
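On Linux, the cap on how many bytes TSQ lets a socket keep queued is exposed as the `net.ipv4.tcp_limit_output_bytes` sysctl. The kernel combines this cap with a per-packet or pacing-based minimum, so adjusting it alone may not reproduce the Mininet behavior on a given MPTCP kernel; that is something to verify experimentally. The hypothetical helper below only reads the current value. Changing it requires root, for example via `sysctl -w`.

```python
from pathlib import Path

# procfs location of the net.ipv4.tcp_limit_output_bytes sysctl on Linux
TSQ_SYSCTL = Path("/proc/sys/net/ipv4/tcp_limit_output_bytes")


def read_tsq_limit(path=TSQ_SYSCTL):
    """Return the TSQ byte cap, or None if the sysctl is unavailable (e.g. not Linux)."""
    try:
        return int(path.read_text().strip())
    except (OSError, ValueError):
        return None


limit = read_tsq_limit()
if limit is None:
    print("tcp_limit_output_bytes is not available on this system")
else:
    print(f"TSQ caps queued bytes per socket at {limit} bytes")
```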

Technical Report

This experience report is available as a technical report. Please use the following citation in scientific papers.

```bibtex
@article{froemmgen2017mininet,
  title={{Mininet /Netem Emulation Pitfalls: A Multipath TCP Scheduling Experience}},
  author={Fr\"ommgen, Alexander},
  year={2017},
  howpublished="https://progmp.net/mininetPitfalls.html"
}
```

Acknowledgments

We would like to thank the Mininet mailing list member who replied to us and supported our analysis.

This work has been funded by MAKI to make the Internet more adaptive.

Contact

This work is presented by Max Weller, Amr Rizk and Alexander Frömmgen. Feel free to contact Alexander Frömmgen for comments and questions.